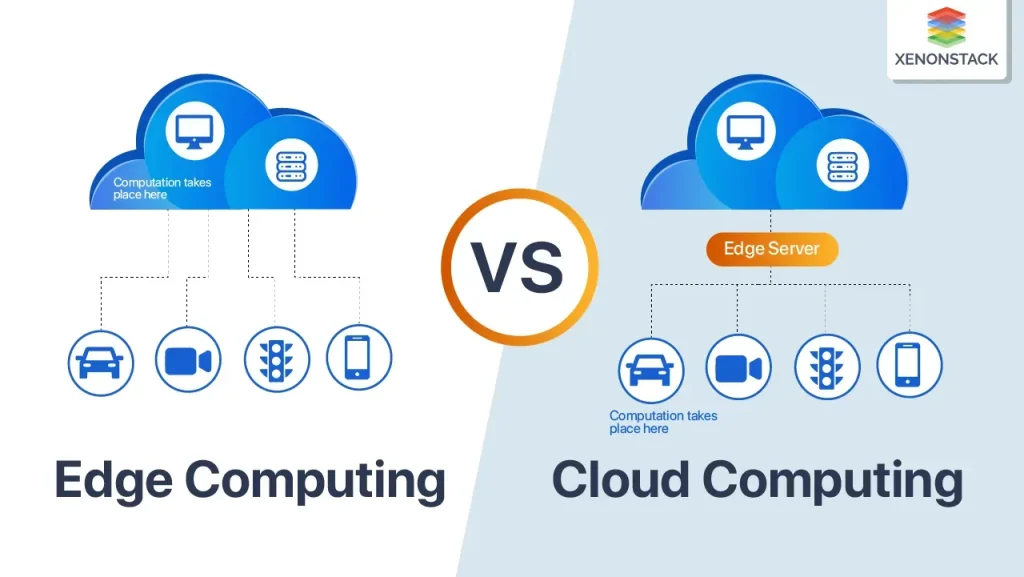

Cloud-to-Edge Computing combines the cloud’s scale with edge resources to deliver real-time insights for modern enterprises. This blended approach moves processing closer to sensors and devices, which is where the main edge computing benefits come from. Organizations can leverage a hybrid cloud infrastructure that combines on-premises data centers, regional edge nodes, and public cloud services. Edge AI deployment becomes practical when models are trained in the cloud and run inference at the edge, reducing latency and bandwidth needs. A well-designed cloud edge architecture supports security, data governance, and scalable orchestration across the entire continuum.

Another way to frame this shift is through the lens of distributed computing at the network edge, where data processing happens closer to devices and sensors. This edge-native approach, often described as edge intelligence or near-data processing, complements centralized cloud capabilities. In practice, organizations orchestrate a continuum that combines centralized governance with localized compute, storage, and analytics. By embracing a multi-tier architecture, teams can optimize latency, bandwidth, and data sovereignty while maintaining visibility and control. The result is a more resilient IT fabric that supports real-time decision-making across industrial, commercial, and consumer applications.

Cloud-to-Edge Computing: Real-Time Intelligence at the Edge

Cloud-to-Edge Computing blends the scalability of cloud services with the responsiveness of edge resources, delivering real-time insights where data is created. This approach relies on a cloud edge architecture that distributes workloads across on-premises data centers, regional edge nodes, and public clouds, unlocking edge computing benefits such as lower latency, data locality, and faster action. A well-designed hybrid cloud infrastructure orchestrates these resources to balance performance, cost, and governance across the edge and the cloud.

Operationally, cloud-to-edge computing enables selective data processing at the source, with only periodic syncing to central repositories. This reduces bandwidth consumption, supports privacy and regulatory requirements, and improves resilience when network connectivity is intermittent. Edge AI deployment can run inference locally, while the cloud handles training, policy management, and global analytics, creating a scalable, secure, and auditable system.
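As a rough illustration of processing at the source and syncing periodically, the sketch below summarizes raw readings on the edge node and forwards only compact summaries. The `EdgeAggregator` class and the `upload` callback are hypothetical names invented for this example, not part of any specific product; the callback stands in for whatever uplink a real deployment would use.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Callable, List


@dataclass
class EdgeAggregator:
    """Summarizes raw readings locally and forwards only compact summaries."""

    # Hypothetical uplink: returns True when the summary was delivered.
    upload: Callable[[dict], bool]
    window: List[float] = field(default_factory=list)
    pending: List[dict] = field(default_factory=list)

    def record(self, value: float) -> None:
        # Raw readings stay on the edge node.
        self.window.append(value)

    def sync(self) -> int:
        """Summarize the current window, then try to send every queued summary.

        Returns the number of summaries delivered during this call.
        """
        if self.window:
            self.pending.append({
                "count": len(self.window),
                "mean": mean(self.window),
                "max": max(self.window),
            })
            self.window = []
        sent = 0
        while self.pending:
            if not self.upload(self.pending[0]):
                break  # link is down: keep summaries queued for the next sync
            self.pending.pop(0)
            sent += 1
        return sent


# Usage: three raw readings leave the node as a single summary.
received = []
aggregator = EdgeAggregator(upload=lambda s: received.append(s) is None)
for reading in (20.0, 22.0, 27.0):
    aggregator.record(reading)
aggregator.sync()
print(received)
```

Queuing summaries when the upload fails is one simple way to tolerate intermittent connectivity; a production system would likely also bound the queue and persist it to disk.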

Edge AI Deployment in Cloud Edge Architecture: Building a Scalable Hybrid Cloud Infrastructure

Edge AI deployment places machine learning inference on devices, gateways, and regional nodes, enabling near-instant decisions and autonomous responses. When paired with cloud edge architecture, organizations keep sensitive data local, reduce data movement, and preserve bandwidth, while the cloud provides model training, versioning, and governance. This synergy is a core aspect of a scalable hybrid cloud infrastructure that supports continuous AI-enabled operations.
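The split described above, local inference with cloud-side versioning, can be sketched as follows. This is a minimal sketch under stated assumptions: `ModelArtifact` and `EdgeInferenceNode` are hypothetical names, and the "models" are plain threshold functions standing in for whatever a real cloud training pipeline would publish.

```python
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple


@dataclass(frozen=True)
class ModelArtifact:
    """A versioned model as it might be published from the cloud (hypothetical)."""

    version: int
    predict: Callable[[Sequence[float]], str]


class EdgeInferenceNode:
    """Runs inference locally and adopts newer model versions when offered."""

    def __init__(self, model: ModelArtifact) -> None:
        self.model = model

    def infer(self, features: Sequence[float]) -> Tuple[str, int]:
        # Features never leave the node; the version is returned for auditing.
        return self.model.predict(features), self.model.version

    def maybe_update(self, published: ModelArtifact) -> bool:
        # Only move forward: ignore artifacts that are not newer.
        if published.version > self.model.version:
            self.model = published
            return True
        return False


# Usage: the cloud publishes version 2 with a stricter alert threshold.
v1 = ModelArtifact(1, lambda x: "alert" if max(x) > 80 else "ok")
v2 = ModelArtifact(2, lambda x: "alert" if max(x) > 75 else "ok")

node = EdgeInferenceNode(v1)
print(node.infer([78.0]))
node.maybe_update(v2)
print(node.infer([78.0]))
```

Returning the model version alongside each prediction is a small design choice that keeps local decisions traceable to the centrally governed artifact that produced them.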

To scale edge AI deployment across an enterprise, teams must invest in interoperable orchestration, standardized data formats, and robust security. Using modular edge devices, open APIs, and clear data governance, enterprises can move from pilot projects to enterprise-wide deployments, measuring outcomes like latency, uptime, and total cost of ownership. The result is a resilient, intelligent ecosystem that leverages cloud edge architecture for centralized control and edge intelligence for local action.

Frequently Asked Questions

What is Cloud-to-Edge Computing and how do edge computing benefits fit into a hybrid cloud infrastructure?

Cloud-to-edge computing is a continuum that blends the cloud’s centralized control with edge resources, allowing workloads to move between central data centers and nearby edge nodes based on latency, privacy, and regulatory needs. Edge computing benefits include real-time insights, lower latency, bandwidth savings, data locality and privacy, resilience, and cost efficiency, all achieved by processing data at or near the source. In a hybrid cloud infrastructure, workloads are orchestrated across on-premises, regional edge, and public cloud resources using a cloud edge architecture that balances centralized governance with local autonomy. Key considerations include data governance and security across the edge-cloud boundary, plus edge AI deployment when real-time inference is required.
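To make the placement idea in this answer concrete, here is a minimal sketch of a rule that picks a tier from a latency budget and a data-residency flag. The tier latencies are illustrative assumptions, not measurements, and `Workload`, `Tier`, and `place` are hypothetical names introduced only for this example.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    ON_PREMISES = "on-premises"
    REGIONAL_EDGE = "regional-edge"
    PUBLIC_CLOUD = "public-cloud"


# Assumed round-trip latency per tier in milliseconds (illustrative only).
ASSUMED_LATENCY_MS = {
    Tier.ON_PREMISES: 5,
    Tier.REGIONAL_EDGE: 30,
    Tier.PUBLIC_CLOUD: 120,
}


@dataclass
class Workload:
    name: str
    latency_budget_ms: float
    data_must_stay_local: bool = False  # privacy or regulatory constraint


def place(workload: Workload) -> Tier:
    """Pick a tier for a workload using latency and data-residency rules."""
    if workload.data_must_stay_local:
        return Tier.ON_PREMISES
    # Prefer the most centralized tier that still meets the latency budget.
    for tier in (Tier.PUBLIC_CLOUD, Tier.REGIONAL_EDGE, Tier.ON_PREMISES):
        if ASSUMED_LATENCY_MS[tier] <= workload.latency_budget_ms:
            return tier
    # Nothing meets the budget: fall back to the closest tier as best effort.
    return Tier.ON_PREMISES


# Usage
print(place(Workload("defect-detection", latency_budget_ms=10)))
print(place(Workload("weekly-report", latency_budget_ms=5000)))
print(place(Workload("patient-records", 5000, data_must_stay_local=True)))
```

A real orchestrator would weigh cost, capacity, and governance policy as well; the point here is only that placement along the continuum can be expressed as an explicit, testable policy.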

How does Cloud-to-Edge Computing enable edge AI deployment and shape cloud edge architecture for real-time analytics?

Cloud-to-edge computing enables edge AI deployment by moving models, inference, and intelligence closer to data sources, using edge devices and gateways with accelerators to run optimized models for real-time analytics. This approach reduces latency, lowers bandwidth usage, and enhances privacy while maintaining central governance and model updates in the cloud. Cloud edge architecture provides the patterns and routing—distributed workloads, data locality zones, and policy-driven orchestration—that ensure edge and cloud components work as a unified system. Organizations benefit from faster decision-making and scalable deployments across environments such as manufacturing, healthcare, and logistics.

| Aspect | Key Points |
|---|---|
| Evolution of Technology Infrastructure (Cloud to Edge) | Shifts from centralized data centers to distributed, intelligent networks. Cloud-to-Edge Computing blends cloud scalability with edge locality, creating a spectrum rather than a binary choice. |
| What Cloud-to-Edge Computing Means | An architectural mindset that combines the cloud’s centralized control and analytics with low-latency, data-local edge resources. Workloads migrate along a continuum based on speed, privacy, and regulatory requirements. |
| Benefits | Real-time insights at the edge; latency reduction; bandwidth efficiency; privacy/compliance through data locality; operational resilience; potential cost optimization with hybrid resources; faster innovation via edge AI deployment. |
| Key Components | Hybrid cloud infrastructure; Cloud edge architecture; Edge devices and gateways; Orchestration and management; Data governance and security; Edge AI deployment. |
| Architectural Patterns | Distributed cloud; Fog computing; Multi-access Edge Computing (MEC); Data locality zones. |
| Use Cases Across Industries | Manufacturing/Industrial IoT; Healthcare; Retail and logistics; Smart cities; Autonomous systems. |
| Challenges and Best Practices | Security/threat modeling; Data governance/compliance; Interoperability; Management/observability; Skills and culture. |
| Roadmap to Implementation | Assessment and strategy; Pilot projects; Scale and harmonize; Continual optimization. |
| The Human Factor | People and cross-functional teams; ongoing training; governance; shared tooling. |

Summary

Cloud-to-Edge Computing represents a natural evolution in technology infrastructure, uniting the centralized power of the cloud with the immediacy of edge resources to deliver faster insights and smarter automation. By blending on-site and near-site processing with scalable cloud capabilities, organizations achieve lower latency, improved data locality, and greater resilience. To realize this potential, pursue a hybrid cloud infrastructure, implement cloud edge architecture that supports governance and local autonomy, and embrace edge AI deployment at the point of need. As industries digitize and workloads demand tighter latency and stronger data privacy, the cloud-to-edge continuum will empower real-time decision-making while preserving control and visibility across the entire stack.