Edge Computing and Software reshapes how modern applications are built, deployed, and maintained, bringing computation closer to where data is produced and enabling more responsive experiences. For developers, this shift prompts a rethinking of architecture patterns, data flows, security models, and testing practices across distributed environments. Edge AI applications enable on-device inference and local data processing, reducing bandwidth needs and improving privacy for user interactions. This evolution pushes software design toward modularity, lightweight orchestration, and resilient deployment across devices, gateways, and edge servers. As teams explore edge-native tooling, governance, and observable operations, they balance local autonomy with centralized visibility to keep updates safe and scalable.

Viewed through the lens of distributed computing at the network edge, the same idea emphasizes processing data close to its sources rather than defaulting to a central data center. Near-data processing, fog computing, and on-device analytics describe the same shift toward local intelligence that complements cloud capabilities. This vocabulary helps developers reason about data locality, synchronization, and security in ways that map to real-world deployments. Using these related terms consistently, in the spirit of latent semantic indexing (LSI), also improves searchability and cross-team collaboration.

Edge Computing and Software: Architecting resilient applications at the edge

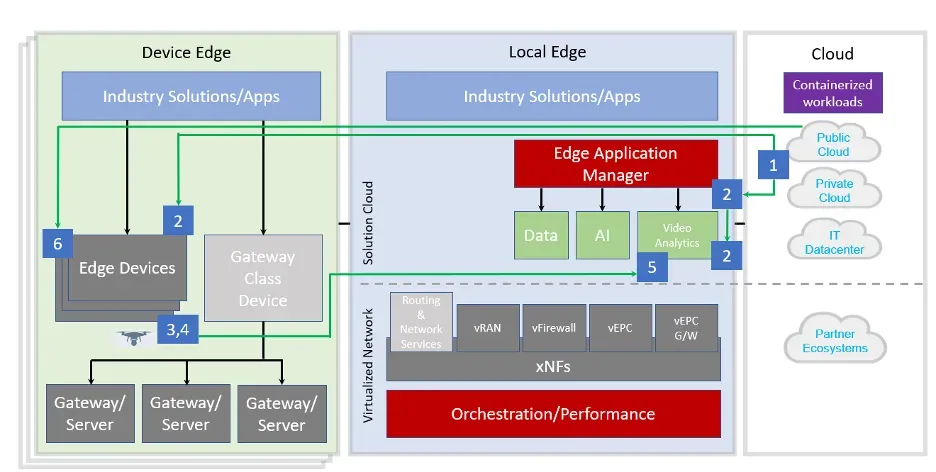

Edge Computing and Software describes the practical fusion of computation with the physical edge, where software runs on resource-constrained devices, gateways, and regional servers while being orchestrated from central platforms. For developers, this means embracing patterns that prioritize modularity, lightweight orchestration, and containerization to keep latency low and updates rapid. It also establishes software architecture at the edge as a discipline: designing services that can operate with limited CPU and memory, tolerate intermittent connectivity, and synchronize state when the network returns.
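Tolerating intermittent connectivity and synchronizing state when the network returns can be illustrated with a minimal store-and-forward sketch. `OutboxQueue`, `fake_send`, and the bounded-buffer policy (drop the oldest message when full) are hypothetical choices made for illustration. They are not the API of any particular edge framework. A real device would likely persist the buffer to local storage so that it survives reboots.

```python
from collections import deque
from typing import Any, Callable, Deque, Dict

Message = Dict[str, Any]


class OutboxQueue:
    """Store-and-forward buffer for an edge device (hypothetical helper).

    `send` is any callable that returns True when the upstream endpoint
    accepted a message and False when the link is unavailable.
    """

    def __init__(self, send: Callable[[Message], bool], max_items: int = 1000) -> None:
        self._send = send
        # Bounded buffer: when full, deque(maxlen=...) discards the oldest item,
        # which caps memory use on a constrained device.
        self._queue: Deque[Message] = deque(maxlen=max_items)

    def enqueue(self, message: Message) -> None:
        self._queue.append(message)

    def flush(self) -> int:
        """Send buffered messages in order, stopping at the first failure."""
        sent = 0
        while self._queue:
            if not self._send(self._queue[0]):
                break  # link still down: keep the rest for the next flush
            self._queue.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._queue)


# Usage: simulate a device that buffers while offline and syncs on reconnect.
link_up = False
delivered = []


def fake_send(message: Message) -> bool:
    if link_up:
        delivered.append(message)
    return link_up


outbox = OutboxQueue(fake_send, max_items=3)
for seq in range(5):
    outbox.enqueue({"seq": seq})  # seq 0 and 1 are evicted by the bound
sent_offline = outbox.flush()     # 0: nothing leaves while offline
link_up = True
sent_online = outbox.flush()      # 3: seq 2, 3, 4 in order
```

Sending strictly in order and stopping at the first failure preserves the sequence of state changes. The trade-off is that one undeliverable message blocks everything queued behind it.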

Architecting for the edge often involves a layered data flow: local processing near data sources, followed by selective cloud involvement. In practice, this translates to edge cloud integration that coordinates governance, training data, and centralized analytics while preserving local autonomy. IoT edge computing becomes a core consideration as sensors, devices, and gateways generate streams that must be filtered, enriched, and acted upon locally before any upstream transmission. By focusing on data locality and resilient deployment models, developers can deliver faster responses and improved resilience without sacrificing governance or security.
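The idea of filtering streams locally before any upstream transmission can be sketched with a deadband filter, which is a simple change-threshold rule assumed here for illustration. `DeadbandFilter`, the 0.5 threshold, and the summary fields are hypothetical. The filter forwards only significant changes immediately. It keeps every reading locally so that a compact aggregate can be uploaded later.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Dict, List, Optional


@dataclass
class DeadbandFilter:
    """Local filter for one sensor stream (hypothetical helper).

    A reading is forwarded upstream only when it differs from the last
    forwarded value by more than `deadband`; every reading is kept in a
    local window so a compact summary can be uploaded periodically.
    """
    deadband: float
    last_sent: Optional[float] = None
    window: List[float] = field(default_factory=list)

    def process(self, value: float) -> Optional[float]:
        self.window.append(value)
        if self.last_sent is None or abs(value - self.last_sent) > self.deadband:
            self.last_sent = value
            return value  # significant change: act on it or transmit now
        return None       # within the deadband: stays on the device

    def summary(self) -> Dict[str, float]:
        """Aggregate the current window, then reset it."""
        if not self.window:
            return {"count": 0}
        stats = {
            "count": len(self.window),
            "min": min(self.window),
            "max": max(self.window),
            "mean": mean(self.window),
        }
        self.window.clear()
        return stats


f = DeadbandFilter(deadband=0.5)
readings = [20.0, 20.1, 20.2, 21.5, 21.6, 19.0]
forwarded = [v for v in readings if f.process(v) is not None]  # [20.0, 21.5, 19.0]
window_stats = f.summary()  # count 6, min 19.0, max 21.6, mean ~20.4
```

In this example, six readings produce three immediate transmissions plus one summary record. That is the kind of bandwidth saving the layered data flow is meant to deliver.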

Edge AI Applications and Observability: Delivering intelligent, low-latency experiences at the edge

Edge AI applications enable on-device inference and real-time analytics, reducing round-trips to the cloud and preserving privacy. Deploying models at the edge supports ultra-low-latency decision-making, smarter sensor fusion, and context-aware experiences that can scale across large device fleets. Successful edge AI requires careful model management (versioning, updates, and fallback strategies when devices go offline) while maintaining a coherent data model across distributed nodes.
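Model versioning with a fallback strategy can be pictured as a small on-device registry. The registry serves the newest healthy model and reverts to the previous version when the active one fails. This is a sketch under stated assumptions. `ModelRegistry`, `ModelVersion`, and the rule that any exception marks a model unhealthy are hypothetical. A real deployment would likely also verify model integrity and validate outputs.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class ModelVersion:
    version: str
    predict: Callable[[List[float]], float]
    healthy: bool = True


class ModelRegistry:
    """Hypothetical on-device registry: serves the newest healthy version and
    falls back to older installed versions when the active one fails."""

    def __init__(self) -> None:
        self._versions: List[ModelVersion] = []  # ordered oldest -> newest

    def install(self, model: ModelVersion) -> None:
        self._versions.append(model)

    def active(self) -> Optional[ModelVersion]:
        for model in reversed(self._versions):
            if model.healthy:
                return model
        return None

    def infer(self, features: List[float]) -> Dict[str, object]:
        while True:
            model = self.active()
            if model is None:
                raise RuntimeError("no healthy model installed")
            try:
                return {"version": model.version, "score": model.predict(features)}
            except Exception:
                # Assumption: any failure marks the version bad; retry with the next-newest.
                model.healthy = False


def v1(x: List[float]) -> float:
    return sum(x) / len(x)


def v2(x: List[float]) -> float:
    raise ValueError("corrupt weights")  # simulates a bad update


registry = ModelRegistry()
registry.install(ModelVersion("1.0", v1))
registry.install(ModelVersion("2.0", v2))
result = registry.infer([1.0, 2.0, 3.0])  # served by "1.0" after "2.0" fails
```

Because older versions stay installed, the device can recover without a network round-trip. This matters most when the device is offline at the moment the new model misbehaves.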

To sustain reliability and operability, observability must span devices, gateways, and cloud components. Edge-centric DevOps involves CI/CD pipelines tailored for edge software, with staged rollouts, robust update mechanisms, and rollback strategies that protect service continuity. By instrumenting standardized traces, metrics, and logs across the edge and cloud boundaries, teams gain end-to-end visibility for performance, security, and data quality, enabling effective governance in IoT edge computing ecosystems and across edge cloud integration initiatives.
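Staged rollouts are often implemented by hashing each device ID into a stable bucket. This keeps the same devices in the early cohort as the rollout percentage grows. The rollout advances only while an observed error rate stays within a budget. The sketch below assumes a 1, 10, 50, 100 percent schedule and a 2% error budget. The function names and the release label `fw-2.1` are hypothetical.

```python
import hashlib


def rollout_bucket(device_id: str, release: str) -> int:
    """Map a device deterministically to a bucket in 0..99 for a given release."""
    digest = hashlib.sha256(f"{release}:{device_id}".encode()).hexdigest()
    return int(digest[:8], 16) % 100


def should_update(device_id: str, release: str, percent: int) -> bool:
    """True if this device falls inside the current rollout percentage."""
    return rollout_bucket(device_id, release) < percent


def next_stage(current_percent: int, error_rate: float, max_error_rate: float = 0.02) -> int:
    """Advance to the next stage while errors stay under budget; return 0 to roll back."""
    stages = [1, 10, 50, 100]
    if error_rate > max_error_rate:
        return 0  # halt and roll the release back
    for stage in stages:
        if stage > current_percent:
            return stage
    return 100  # already fully rolled out


devices = [f"device-{i}" for i in range(10000)]
at_10 = {d for d in devices if should_update(d, "fw-2.1", 10)}
at_50 = {d for d in devices if should_update(d, "fw-2.1", 50)}
```

Salting the hash with the release name gives each release a different early cohort, so no single group of devices always receives updates first.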

Frequently Asked Questions

What is Edge Computing and Software, and how does edge cloud integration benefit developers building edge applications?

Edge Computing and Software describes distributing computation and software toward the network edge, closer to data sources such as sensors and devices, so that processing happens on edge devices, gateways, and local servers while central platforms orchestrate and govern the system. Edge cloud integration keeps the edge connected to centralized models, data, and governance, delivering low latency and bandwidth savings without sacrificing analytics power. For developers, this means you can run on-device inference, filter data locally, and push only valuable insights to the cloud while maintaining a consistent data model and security posture. Key considerations include resource constraints, intermittent connectivity, data locality, security, and a unified observability strategy. Useful patterns include edge devices with local processing, edge gateways, and fog nodes, plus lightweight runtimes and containers that keep latency low and updates agile.
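Intermittent connectivity also shapes how a device reconnects. One common technique, brought in here for illustration rather than taken from the text, is exponential backoff with full jitter. It spreads reconnect attempts from many devices so they do not all hit the backend at the same moment. `retry_with_backoff` and its defaults are hypothetical. The `sleep` callable is injected so the sketch can be tested, and a device would pass `time.sleep`.

```python
import random
from typing import Callable, Optional


def retry_with_backoff(
    op: Callable[[], bool],
    sleep: Callable[[float], None],
    base: float = 1.0,
    cap: float = 60.0,
    max_attempts: int = 6,
    rng: Optional[random.Random] = None,
) -> bool:
    """Retry `op` until it returns True, waiting a random time in
    [0, min(cap, base * 2**attempt)] between attempts (full jitter)."""
    rng = rng or random.Random()
    for attempt in range(max_attempts):
        if op():
            return True
        if attempt < max_attempts - 1:  # no pointless wait after the final attempt
            sleep(rng.uniform(0, min(cap, base * 2 ** attempt)))
    return False


# Usage: a sync call whose link comes back on the third try.
calls = {"n": 0}


def flaky_sync() -> bool:
    calls["n"] += 1
    return calls["n"] >= 3


waits = []
ok = retry_with_backoff(flaky_sync, waits.append, rng=random.Random(42))
```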

Which patterns and best practices support edge AI applications and software architecture at the edge within Edge Computing and Software?

Core patterns include a layered approach where edge devices perform local processing, gateways aggregate data, fog nodes provide intermediate compute, and cloud backends handle governance and training. For edge AI applications, prioritize on-device inference, model versioning, and resilient update or fallback strategies when connectivity is limited. Use lightweight containers or WASI-based runtimes for modular services at the edge, and edge cloud integration to update models and share improvements. Emphasize data shaping and privacy by processing at the source and transmitting only non-sensitive aggregates. Maintain observability across devices, gateways, and cloud with end-to-end metrics, logs, and traces. Best practices also include designing for offline operation, keeping services small and composable, enforcing secure boot and attestation, and planning lifecycle management with staged rollouts and robust testing—essential for IoT edge computing scenarios and a scalable software architecture at the edge.
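Processing at the source and transmitting only non-sensitive aggregates can be sketched as a function that drops identifiers and collapses raw events into per-group statistics. The event fields (`user_id`, `zone`, `dwell_seconds`) and the minimum group size of 5 are assumptions. Suppressing small groups is a simple safeguard, not a formal privacy guarantee such as differential privacy.

```python
from collections import defaultdict
from typing import Any, Dict, Iterable, List


def aggregate_for_upload(events: Iterable[Dict[str, Any]], min_group_size: int = 5) -> List[Dict[str, Any]]:
    """Collapse raw events into per-zone counts and averages (hypothetical schema).

    `user_id` is never copied into the output, and zones with fewer than
    `min_group_size` events are suppressed rather than uploaded.
    """
    groups: Dict[str, List[float]] = defaultdict(list)
    for event in events:
        groups[event["zone"]].append(event["dwell_seconds"])
    upload = []
    for zone, values in sorted(groups.items()):
        if len(values) >= min_group_size:
            upload.append({
                "zone": zone,
                "count": len(values),
                "avg_dwell_seconds": sum(values) / len(values),
            })
    return upload


events = [
    {"user_id": f"u{i}", "zone": "A", "dwell_seconds": d}
    for i, d in enumerate([10, 20, 30, 40, 50])
] + [
    {"user_id": "u9", "zone": "B", "dwell_seconds": 5},
    {"user_id": "u10", "zone": "B", "dwell_seconds": 7},
]
upload = aggregate_for_upload(events)  # zone B (2 events) is suppressed
```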

| Topic | Key Points |
|---|---|
| Definition and scope | |
| Why edge matters | |
| Architecture and patterns | |
| Practical considerations for the edge | |
| Tools, languages, and patterns | |
| Edge AI applications and software | |
| Data management, security, and governance | |
| Observability, reliability, and DevOps | |
| Use cases across industries | |
| Best practices for building at the edge | |
| Future trends to watch | |

Summary

Edge Computing and Software is redefining how software is designed, deployed, and operated by bringing computation closer to data sources and users. This shift enables lower latency, improved resilience, and stronger data locality, while offering new opportunities for on-device analytics, privacy, and bandwidth efficiency. Developers must adapt to distributed architectures, constrained resources, intermittent connectivity, and the need for robust observability and secure updates. Typical architectures combine edge devices, gateways, fog nodes, and cloud backends, enabling modular, containerized implementations that span environments while maintaining central governance. Practical guidance includes offline operation, data shaping at the edge, and edge-native CI/CD. Edge AI and on-device inference enable real-time decisions with reduced cloud dependency, but they require careful model management and fallback strategies when offline. Security, data governance, and reliable updates are essential to protect the edge attack surface. By following best practices in testing, observability, lifecycle management, and cost control, teams can deliver reliable, scalable edge-enabled software. Embracing Edge Computing and Software means balancing local autonomy with central oversight to deliver applications that perform well where users need them most.